Data Architect

In the rapidly evolving world of data engineering, the emergence of Large Language Models (LLMs) is revolutionizing traditional processes. These advanced AI systems are not only transforming how data is extracted, cleaned, and transformed but also redefining the role of data engineers. From accelerating ETL development to enhancing data quality, LLMs introduce a level of efficiency and innovation previously unseen.

This blog post explores how traditional data engineering work changes when LLMs are integrated, using a narrative that follows a data engineer’s journey to harness automation and intelligent frameworks.

Building the foundation of data transformation: A data engineer’s journey

In the vast and dynamic kingdom of DataLand, a determined data engineer named Goofy undertook a noble mission: to design a sophisticated and resilient data model that would become the cornerstone of the kingdom’s data pipeline. This endeavor aimed to transform raw data into valuable insights, empowering analysts and decision-makers throughout the realm.

The vision

Goofy’s goal was clear: to create a data model capable of handling the kingdom’s growing needs while ensuring accuracy, scalability, and efficiency. To accomplish this, Goofy relied on a comprehensive framework that included:

- Business Requirements Documents (BRD): To capture the kingdom’s needs and goals.

- KPI definition guidelines: To align the model with key performance indicators critical for the kingdom’s success.

The power of LLM assistance

Recognizing the complexity of the task, Goofy used Large Language Model (LLM) assistance to streamline the process. With the LLM’s capabilities, Goofy was able to improve collaboration, automate repetitive tasks, and build the data model with greater precision.

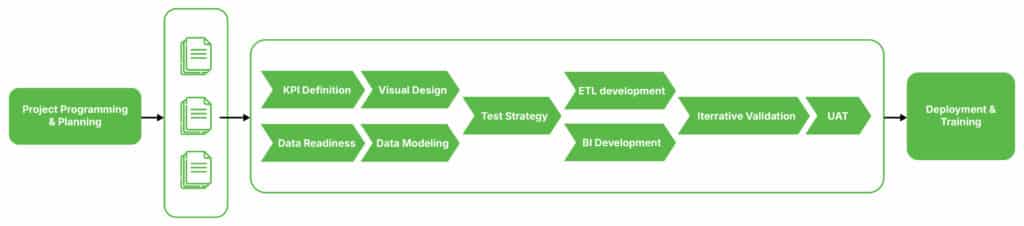

To achieve success, Goofy followed a structured delivery model, with tailored support provided at each phase of the journey. This systematic approach ensured that every aspect of the data model was thoughtfully planned and executed.

The call to adventure: Defining data transformation

Goofy’s mission was no small feat. It required:

- Defining detailed data transformation rules

- Adhering to stringent data quality standards

- Developing a sophisticated data model essential for the future of DataLand’s analytics

The integrity and reliability of the data model were crucial for empowering analysts and decision-makers throughout the realm.

Discovery of the magical artifacts: Source Matrix (SMX) and documentation

As Goofy navigated the complex landscape of ETL (Extract, Transform, Load) development, he encountered two powerful artifacts.

1: The Source Matrix (SMX)

A cornerstone of the data engineering process, the SMX serves as a comprehensive blueprint that defines how data should be transformed, mapped, and validated throughout the pipeline. It ensures consistency, transparency, and ease of maintenance. The Source Matrix includes:

- Transformation rules: Guidelines for converting raw data into its refined state.

- Mapping schema: A blueprint mapping source fields to target destinations.

- Validation dimensions: Six principles ensuring data quality:

  - Completeness: Every record must be accounted for.

  - Uniqueness: Eliminate duplicate records.

  - Consistency: Data values remain uniform across sources.

  - Validity: Data adheres to predetermined standards.

  - Accuracy: Data represents the truth of the real world.

  - Timeliness: Data is relevant and up-to-date.
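To make the three parts of the Source Matrix concrete, here is a minimal sketch of how an SMX could be held in code. The class names (`FieldMapping`, `SourceMatrix`), the table names, and the idea of tagging each mapping with validation dimensions are illustrative assumptions, not a format the article prescribes.

```python
from dataclasses import dataclass, field

# The six validation dimensions named in the Source Matrix.
DIMENSIONS = (
    "completeness", "uniqueness", "consistency",
    "validity", "accuracy", "timeliness",
)

@dataclass(frozen=True)
class FieldMapping:
    """One SMX row: a source field, its target, and the rule that links them."""
    source_field: str
    target_field: str
    transformation: str                # human-readable transformation rule
    validations: tuple[str, ...] = ()  # dimensions to check for this field

@dataclass
class SourceMatrix:
    """Hypothetical in-memory SMX for one source-to-target table pair."""
    source_table: str
    target_table: str
    mappings: list[FieldMapping] = field(default_factory=list)

    def add(self, mapping: FieldMapping) -> None:
        # Reject dimension names that are not among the six listed above.
        unknown = set(mapping.validations) - set(DIMENSIONS)
        if unknown:
            raise ValueError(f"unknown validation dimensions: {sorted(unknown)}")
        self.mappings.append(mapping)

    def target_for(self, source_field: str) -> str:
        # Look up the mapping schema: which target does this source field feed?
        for mapping in self.mappings:
            if mapping.source_field == source_field:
                return mapping.target_field
        raise KeyError(source_field)

# Example entry (table and field names are placeholders).
smx = SourceMatrix("crm.customers", "dw.clients")
smx.add(FieldMapping("customer_id", "client_identifier",
                     "copy as-is", ("completeness", "uniqueness")))
```

Keeping the matrix as plain data like this means the same entries can drive both script generation and quality checks, which is the role the SMX plays in the rest of the story.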

2: Supporting documentation

Documents like the Business Requirements Document (BRD) and KPI definitions provided critical context for the data model’s purpose and requirements.

Seeking the LLM wizards’ aid

Recognizing the enormity of the task, Goofy sought the guidance of the LLM Wizards, each possessing unique skills vital to the mission:

1: Ingestor: Extracting raw data from various sources

With Ingestor’s expertise, Goofy successfully retrieved streams of raw data from diverse sources, ensuring the foundation for the pipeline was solid.

2: Cleaner: Ensuring data adheres to SMX-defined rules.

Cleaner meticulously applied the formatting rules outlined in the SMX, preparing the data with unmatched precision for subsequent transformations.
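As a rough illustration of what applying SMX formatting rules can look like, the sketch below maps field names to small cleaning functions. The field names, the `DD/MM/YYYY` input date format, and the rules themselves are assumptions made up for this example; a real SMX would define its own.

```python
from datetime import datetime

def normalize_date(value: str) -> str:
    """Convert a DD/MM/YYYY string (assumed input format) to ISO 8601."""
    return datetime.strptime(value.strip(), "%d/%m/%Y").date().isoformat()

# Hypothetical formatting rules, keyed by field name.
FORMAT_RULES = {
    "customer_id": lambda v: v.strip().upper(),
    "email": lambda v: v.strip().lower(),
    "signup_date": normalize_date,
}

def clean_record(record: dict, rules: dict = FORMAT_RULES) -> dict:
    """Apply the matching rule to each field; leave other fields and None values untouched."""
    cleaned = {}
    for name, value in record.items():
        if name in rules and value is not None:
            cleaned[name] = rules[name](value)
        else:
            cleaned[name] = value
    return cleaned

raw = {"customer_id": " c-001 ", "email": " Ada@Example.COM", "signup_date": "05/03/2024"}
print(clean_record(raw))
```

Missing values are passed through unchanged here on purpose, so that a later completeness check can report them rather than the cleaning step hiding them.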

3: Transformer: Automating transformation scripts based on SMX mappings.

Armed with SMX’s detailed mappings, Transformer used precise prompts to generate efficient, ready-to-implement transformation scripts.

For example:

“Transform the customer_id field from the source database to the client_identifier field in the target database, ensuring uniqueness as specified in the SMX.”
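A prompt like this one can be assembled from an SMX entry rather than written by hand. The helper below is a minimal sketch: the function name `build_transform_prompt` and its parameters are hypothetical, and sending the prompt to an LLM is deliberately left out because the article does not name a specific model or API.

```python
def build_transform_prompt(source_field: str, target_field: str,
                           constraints: list[str]) -> str:
    """Render one SMX mapping as a natural-language instruction for an LLM."""
    prompt = (
        f"Transform the {source_field} field from the source database "
        f"to the {target_field} field in the target database"
    )
    if constraints:
        # Constraints are the validation dimensions attached to this mapping.
        prompt += ", ensuring " + " and ".join(constraints) + " as specified in the SMX"
    return prompt + "."

print(build_transform_prompt("customer_id", "client_identifier", ["uniqueness"]))
```

Generating prompts from the matrix keeps the wording consistent across fields and means a change to the SMX is picked up the next time the scripts are regenerated.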

4: Validator: Conducting automated quality checks aligned with validation dimensions.

Once transformation was complete, Validator checked data integrity by running automated checks against the six validation dimensions: Completeness, Uniqueness, Consistency, Validity, Accuracy, and Timeliness. Any discrepancies were flagged, along with clear corrective measures to maintain quality.
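Some of these dimensions can be checked mechanically on the transformed records. The sketch below covers four of them (completeness, uniqueness, validity, timeliness) under assumed rules: the `C-` plus three digits identifier pattern, the `updated_on` field, and the 30-day freshness window are all invented for illustration. Consistency and accuracy are omitted because they need a second source or reference data to compare against.

```python
import re
from datetime import date, timedelta

ID_PATTERN = re.compile(r"C-\d{3}")  # hypothetical validity rule for identifiers

def validate(records: list[dict], today: date, max_age_days: int = 30) -> list[tuple]:
    """Return (row_index, dimension, message) for every failed check."""
    issues = []
    seen_ids = set()
    for index, record in enumerate(records):
        identifier = record.get("client_identifier")
        # Completeness: the key field must be present and non-empty.
        if not identifier:
            issues.append((index, "completeness", "client_identifier is missing"))
            continue
        # Uniqueness: the same identifier must not appear twice.
        if identifier in seen_ids:
            issues.append((index, "uniqueness", f"duplicate {identifier}"))
        seen_ids.add(identifier)
        # Validity: the identifier must match the expected pattern.
        if not ID_PATTERN.fullmatch(identifier):
            issues.append((index, "validity", f"malformed {identifier}"))
        # Timeliness: the record must have been updated recently enough.
        updated_on = record.get("updated_on")
        if updated_on is None or today - updated_on > timedelta(days=max_age_days):
            issues.append((index, "timeliness", "record is stale or undated"))
    return issues

sample = [
    {"client_identifier": "C-001", "updated_on": date(2024, 3, 1)},
    {"client_identifier": "C-001", "updated_on": date(2024, 3, 2)},
    {"client_identifier": None, "updated_on": date(2024, 3, 2)},
]
for issue in validate(sample, today=date(2024, 3, 10)):
    print(issue)
```

Returning every failure with its row index and dimension, instead of stopping at the first one, matches the idea of flagging discrepancies so they can be corrected in one pass.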

The delivery model reinvented

During the quest, Goofy uncovered a structured delivery model and integrated the LLM Wizards into each phase to enhance efficiency. This evolved into a three-phase rapid prototyping framework:

- Build: Collaborate with LLM Wizards to create transformation scripts based on the SMX.

- Validate: Use Validator for automated quality checks.

- Optimize: Refine transformation rules and implement continuous improvements.

By embedding LLM-powered automation into the delivery model, Goofy redefined data engineering processes:

- Discovery (BRD Analysis): LLMs summarized large BRDs into actionable requirements.

- Technical design: LLMs quickly drafted architecture diagrams and solution blueprints.

- Data readiness: Cleaner ensured data adhered to predefined SMX rules.

- ETL development: Transformer automated code generation.

- Validation: Validator performed automated testing for data quality compliance.

- Deployment & training: LLMs generated user manuals, guides, and training content.

Conclusion: The power of collaboration

Goofy’s journey underscored the importance of uniting structured frameworks like the Source Matrix with the powerful capabilities of LLM tools. By melding automation, validation, and iterative refinement, data engineers can achieve excellence while upholding the highest standards of data quality.

Interested in learning more? Reach out to us at marketing@confiz.com and discover how our expertise in data and AI can help you achieve data-driven success.