Senior Data Scientist

In the race to leverage Generative AI solutions for innovation, most organizations focus on the output – the text, code, images, and insights these models generate. But behind the scenes, a more pressing challenge emerges: how do we ensure these AI systems remain reliable, cost-effective, and aligned with expectations?

Unlike traditional software, generative AI models don't just execute predefined instructions; they generate new content dynamically, adapting to context, drawing on learned patterns, and sometimes producing unpredictable results. This makes them both powerful and difficult to manage. Issues like hallucinations, performance degradation, prompt sensitivity, and skyrocketing usage costs can silently affect applications, reducing their reliability and trustworthiness.

This is where automated observability comes in. Unlike basic monitoring, which passively tracks system health, observability provides deep, real-time insights into how and why an AI system behaves the way it does. It enables teams to detect, diagnose, and resolve issues before they spiral into major failures, keeping generative AI applications efficient, ethical, and scalable.

In this blog post, we look at why observability matters to developers and data scientists, and explore two LLM observability tools that help keep your AI-driven solutions performant, trustworthy, and cost-efficient in the age of generative AI.

What is Automated Observability in generative AI?

Automated observability is the practice of using automated systems, often powered by Machine Learning and AI itself, to continuously monitor and analyze the behavior of a generative AI model, providing insights into its performance, identifying potential issues, and proactively optimizing its operations without manual intervention.

Monitoring is the first line of defense: it continuously tracks the system's state and flags anomalies or performance issues. Observability goes a step further and helps teams understand the root causes of the problems that monitoring surfaces. Together, observability and monitoring help keep LLM applications secure, reliable, and cost-efficient. To implement observability, organizations should track:

- Cost: The cost of using the LLM, for example per call or per token.

- Context window: Size and content of context window for each LLM call.

- Latency: Time taken to generate a response.

- Throughput: Number of requests processed per unit time.

- Error rates: Frequency of failures or incorrect outputs.

- Model drift: Changes in model performance over time.

- Resource utilization: CPU, GPU, and memory usage.
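To make a few of these metrics concrete, here is a minimal sketch of how cost, context size, latency, throughput, and error rate could be aggregated from logged calls. The `LLMCall` record, its field names, and the per-token prices are all assumptions made for illustration; model drift and resource utilization need other data sources and are left out.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class LLMCall:
    """One recorded LLM request (all field names are illustrative)."""
    latency_s: float        # wall-clock time to generate the response
    prompt_tokens: int      # tokens sent in the context window
    completion_tokens: int  # tokens generated by the model
    ok: bool                # False if the call failed


# Hypothetical prices in USD per 1,000 tokens; substitute your provider's rates.
PRICE_PER_1K_PROMPT = 0.0005
PRICE_PER_1K_COMPLETION = 0.0015


def call_cost(call: LLMCall) -> float:
    """Estimate the cost of a single call from its token counts."""
    return (call.prompt_tokens / 1000 * PRICE_PER_1K_PROMPT
            + call.completion_tokens / 1000 * PRICE_PER_1K_COMPLETION)


def summarize(calls: list[LLMCall], window_s: float) -> dict[str, float]:
    """Aggregate a non-empty list of calls observed over `window_s` seconds."""
    return {
        "total_cost_usd": sum(call_cost(c) for c in calls),
        "avg_context_tokens": mean(c.prompt_tokens for c in calls),
        "avg_latency_s": mean(c.latency_s for c in calls),
        "throughput_rps": len(calls) / window_s,
        "error_rate": sum(not c.ok for c in calls) / len(calls),
    }


calls = [
    LLMCall(latency_s=1.0, prompt_tokens=1000, completion_tokens=200, ok=True),
    LLMCall(latency_s=3.0, prompt_tokens=3000, completion_tokens=400, ok=False),
]
summary = summarize(calls, window_s=60.0)
print(summary)
```

In practice an observability tool collects these records for you; the point of the sketch is only to show that each metric in the list reduces to a simple aggregate over per-call data.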

Why does observability matter in generative AI?

Generative AI is changing the way we create content, automate workflows, and enhance user interactions, making processes more efficient and innovative. As these models grow more advanced and more deeply integrated into business operations, they also become more complex and unpredictable. This raises a critical question: how do we ensure these generative AI systems consistently generate accurate, relevant, and safe outputs?

The key lies in having visibility into how these models function in real-world scenarios. Without a way to monitor their behavior, businesses risk performance slowdowns, escalating costs, unreliable outputs, and compliance challenges. Generative AI observability bridges this gap by providing continuous insights into model performance, efficiency, and decision-making processes, ensuring that AI-driven systems remain optimized, transparent, and reliable.

Let’s walk through why observability is essential for managing and scaling generative AI effectively.

Improved LLM application performance

Generative AI applications rely on Large Language Models (LLMs) to produce high-quality responses quickly and accurately. Continuous monitoring and optimization can enhance the overall performance of LLMs, leading to faster and more accurate results.

Efficient cost management

LLMs are resource-intensive, often consuming significant computational power and cloud resources, which leads to high operational costs. Optimizing resource usage and detecting inefficient processes early can reduce these costs.

Better explainability

One of the biggest challenges with LLMs is their opacity: it is difficult to understand how and why they generate certain responses. Better visibility into how an LLM application arrives at its outputs allows companies to explain and justify the models' decisions, which is particularly important in regulated industries.

Increased reliability

Generative AI systems can sometimes produce hallucinations, biased responses, or misleading information, which can harm user trust and business credibility. Proactive monitoring and maintenance can detect and address such issues early, enhancing the reliability of LLMs.

Observability in action: Must-have tools for AI performance

When deploying generative AI, ensuring consistent performance, reliability, and efficiency is just as important as developing the model itself. AI systems can sometimes behave unpredictably, generating inaccurate outputs, experiencing latency issues, or consuming excessive resources. Without the right level of visibility, identifying and resolving these challenges becomes difficult, leading to inefficiencies and potential risks.

Generative AI observability helps bridge this gap by providing real-time insights into AI behavior, enabling proactive monitoring and optimization. Let’s explore two key LLM observability tools that help organizations gain better control over their generative AI systems, ensuring they operate smoothly and deliver high-quality results.

- Langfuse

- AgentOps

Langfuse

Langfuse is a monitoring and observability tool specifically developed for tracking and analyzing the performance of LLMs. It offers comprehensive functions for capturing and analyzing logs, metrics, and traces. Langfuse enables detailed insights into the functioning of LLMs and early detection of potential issues.

Why Langfuse

- Most used open-source LLMOps platform.

- Model and framework agnostic.

- Built for production.

- API-first: all features are available via API for custom integrations.

- Optionally, Langfuse can be easily self-hosted.

Key features

- Full context: Capture the complete execution flow including API calls, context, prompts, parallelism and more

- User tracking: Add your own identifiers to inspect traces from specific users

- Cost tracking: Monitor model usage and costs across your application

- Quality insights: Collect user feedback and identify low-quality outputs

- Framework support: Integrates with popular frameworks and SDKs such as the OpenAI SDK, LangChain, and LlamaIndex

- Multi-modal: Support for tracing text, images and other modalities

Let’s take a closer look at some of the key features of Langfuse.

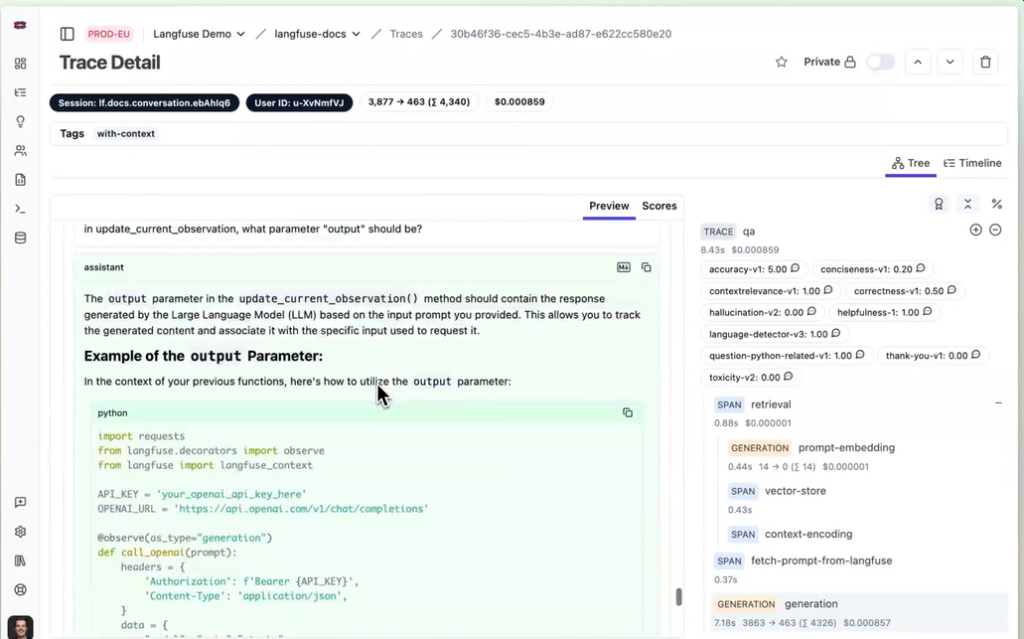

1. Trace monitoring

Traces allow you to track every LLM call and other relevant logic in your app.

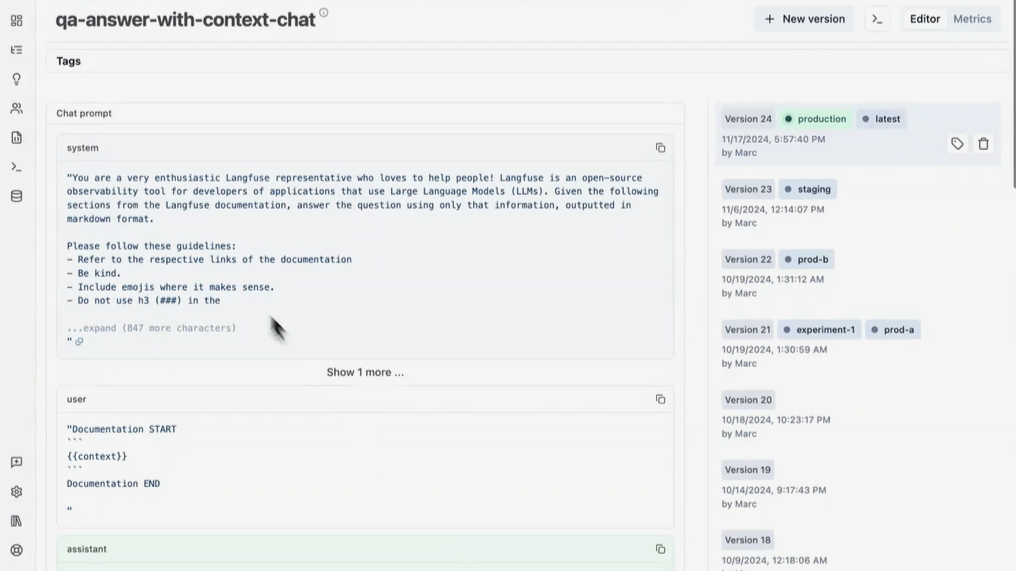
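To illustrate what a trace contains, here is a minimal, library-free sketch that records nested steps of a request along with their durations and parent steps. It is not the Langfuse SDK: the `span` helper, the `SPANS` store, and `fake_llm` are hypothetical names invented for this example, and the helper is not thread-safe.

```python
import time
from contextlib import contextmanager

# In-memory store of finished spans; a real tool would ship these to a backend.
SPANS: list[dict] = []
_stack: list[str] = []  # names of the spans that are currently open


@contextmanager
def span(name: str, **metadata):
    """Record the duration and parent of one step in a request."""
    parent = _stack[-1] if _stack else None
    _stack.append(name)
    start = time.perf_counter()
    try:
        yield
    finally:
        _stack.pop()
        SPANS.append({
            "name": name,
            "parent": parent,
            "duration_s": time.perf_counter() - start,
            **metadata,
        })


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call."""
    return f"echo: {prompt}"


def answer(question: str) -> str:
    # The outer span carries a user identifier so traces can be filtered by user.
    with span("answer", user_id="user-123"):
        with span("retrieve_context"):
            context = "observability docs"
        with span("llm_call", model="placeholder-model"):
            return fake_llm(f"{context}\n{question}")


result = answer("What is a trace?")
for s in SPANS:
    print(s["name"], "<-", s["parent"])
```

Each span closes before its parent does, so the retrieval and LLM steps are recorded first and both point back to the enclosing `answer` span. That parent-child structure is what a trace view renders as a tree.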

2. Prompt Management

Langfuse’s prompt management allows you to centrally control, version, and collaboratively refine your prompts.

Key benefits

- Decoupled from code: Deploy new prompts without needing to redeploy the application.

- Version control: Track changes and quickly revert to previous versions when necessary.

- Multi-format support: Works seamlessly with both text and chat prompts.

- Non-technical friendly: Business users can easily update prompts via the Console.
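The idea behind decoupling and version control can be sketched with a toy in-memory registry. This is an illustration of the concept only, not Langfuse's API; the `PromptRegistry` class and its methods are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Toy in-memory prompt store; a hosted tool would persist this server-side."""
    _versions: dict[str, list[str]] = field(default_factory=dict)
    _active: dict[str, int] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        """Store a new version, make it active, and return its 1-based number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        self._active[name] = len(versions)
        return len(versions)

    def rollback(self, name: str, version: int) -> None:
        """Point the active version back at an earlier one."""
        if not 1 <= version <= len(self._versions[name]):
            raise ValueError(f"unknown version {version} for prompt {name!r}")
        self._active[name] = version

    def get(self, name: str) -> str:
        """Return the currently active template for `name`."""
        return self._versions[name][self._active[name] - 1]


registry = PromptRegistry()
registry.publish("summarize", "Summarize this text: {text}")
registry.publish("summarize", "Summarize in three bullet points: {text}")

# Application code only asks for the active prompt, so no redeploy is needed.
prompt_v2 = registry.get("summarize").format(text="LLM observability")

# Reverting is a registry operation, not a code change.
registry.rollback("summarize", 1)
prompt_v1 = registry.get("summarize").format(text="LLM observability")
print(prompt_v2)
print(prompt_v1)
```

Because the application looks prompts up by name at run time, publishing or rolling back a version changes behavior without touching the deployed code.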

3. Other features

- Evaluations: Evaluations are a central part of the LLM application development workflow. Langfuse provides fully managed evaluators that run on production or development traces, lets you collect user feedback or annotate traces with human feedback, and allows you to build custom evaluation pipelines.

- Datasets: Langfuse datasets let you create test sets and benchmarks to evaluate the performance of your LLM application.

- Playgrounds: The LLM Playground is a tool for testing and iterating on your prompts and model configurations, shortening the feedback loop and accelerating development.
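As a rough illustration of how a dataset and an evaluator fit together, the sketch below runs a stand-in application over a small test set and scores each output. Everything here is hypothetical (the `DATASET` items, `toy_app`, and the exact-match scorer); it shows the general pattern rather than Langfuse's own evaluation API.

```python
from typing import Callable

# A tiny hand-written test set; each item pairs an input with the expected answer.
DATASET = [
    {"input": "2 + 2", "expected": "4"},
    {"input": "capital of France", "expected": "Paris"},
    {"input": "largest planet", "expected": "Jupiter"},
]


def toy_app(question: str) -> str:
    """Stand-in for the LLM application under test."""
    canned = {"2 + 2": "4", "capital of France": "paris", "largest planet": "Saturn"}
    return canned.get(question, "")


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the answers match, ignoring case and outer whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_eval(app: Callable[[str], str], dataset: list[dict]) -> dict:
    """Run the app on every item and return the mean score plus failing inputs."""
    scores, failures = [], []
    for item in dataset:
        score = exact_match(app(item["input"]), item["expected"])
        scores.append(score)
        if score < 1.0:
            failures.append(item["input"])
    return {"mean_score": sum(scores) / len(scores), "failures": failures}


report = run_eval(toy_app, DATASET)
print(report)
```

Exact match is the simplest possible scorer; real pipelines often swap in semantic similarity, rubric-based LLM judges, or human annotation while keeping the same loop.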

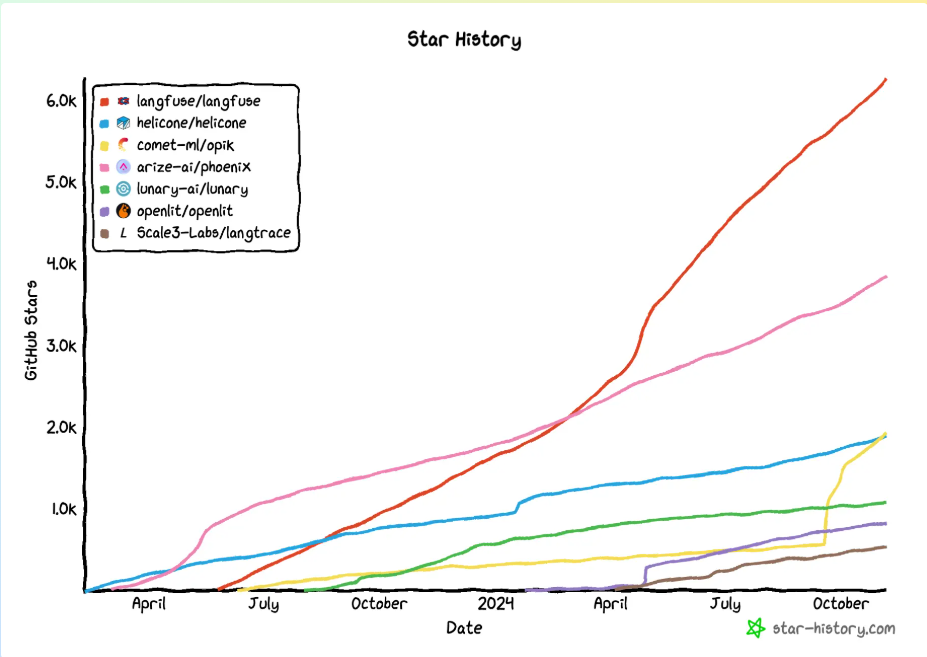

4. Popularity

Langfuse describes itself as the most-starred open-source LLMOps tool on GitHub and the fastest growing since its launch in June 2023.

AgentOps

Built by the AgentOps team, AgentOps is another AI observability tool that helps developers build, evaluate, and monitor AI agents, from prototype to production. It tracks agents across executions and records LLM prompts, completions, costs, and timestamps. It also logs errors and links them back to their causal events. AgentOps integrates with leading agentic frameworks and providers such as CrewAI, LlamaIndex, AutoGPT, AutoGen, LangChain, and Cohere.

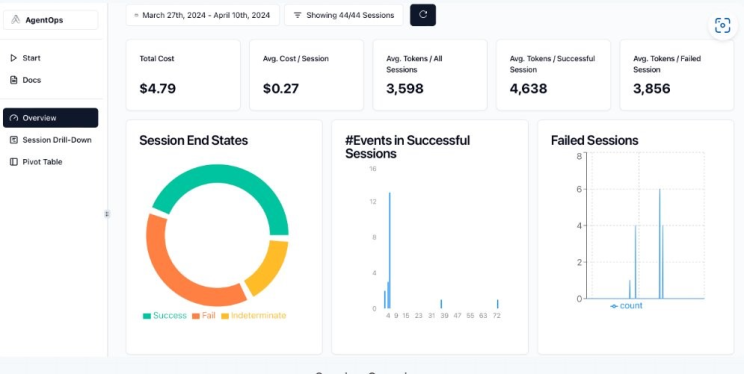

With just two lines of code, you can free yourself from the chains of the terminal and, instead, visualize your agents' behavior in your AgentOps Dashboard. After you set up AgentOps, each execution of your program is recorded as a session, and its data is captured automatically.
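A minimal sketch of that setup might look like the following. It assumes the `agentops` package is installed and that your key is stored in an `AGENTOPS_API_KEY` environment variable; the wrapper function is our own addition so the script still runs when either is missing, and the exact `init` parameters should be checked against the current AgentOps documentation.

```python
import os


def start_agentops() -> bool:
    """Start AgentOps recording if an API key and the SDK are both available."""
    api_key = os.environ.get("AGENTOPS_API_KEY")
    if not api_key:
        return False  # no key configured, so run without recording
    try:
        import agentops  # third-party SDK, installed with: pip install agentops
    except ImportError:
        return False
    # The "two lines" are the import above and this call.
    agentops.init(api_key=api_key)
    return True


enabled = start_agentops()
print("AgentOps recording enabled:", enabled)
```

Call this once at program start, before any agents run, so that the whole execution lands in a single session.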

1. Main views

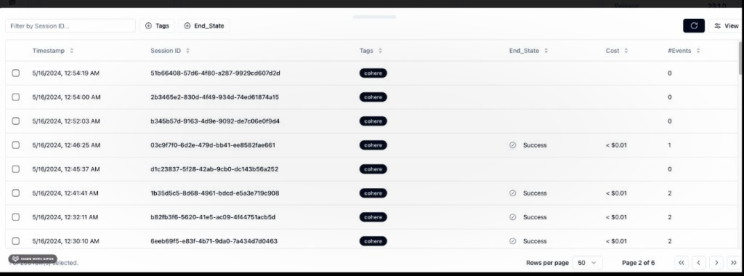

Session overview

View a meta-analysis of all your sessions in a single view. Here you can check the total cost, the average cost per session, and the average number of tokens per session (overall, or for successful and failed sessions separately), along with other statistics.
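The statistics in this view are simple aggregates over session records. The sketch below shows how they could be computed; the `sessions` list and its field names are hypothetical and do not reflect AgentOps' actual data model.

```python
from statistics import mean

# Hypothetical session records; the field names are illustrative only.
sessions = [
    {"status": "success", "cost_usd": 0.10, "tokens": 1000},
    {"status": "success", "cost_usd": 0.30, "tokens": 3000},
    {"status": "fail", "cost_usd": 0.20, "tokens": 5000},
]


def avg_tokens(records: list[dict]) -> float:
    """Average token count, or 0.0 when there are no matching sessions."""
    return mean(r["tokens"] for r in records) if records else 0.0


succeeded = [s for s in sessions if s["status"] == "success"]
failed = [s for s in sessions if s["status"] == "fail"]

overview = {
    "total_cost_usd": sum(s["cost_usd"] for s in sessions),
    "avg_cost_per_session_usd": mean(s["cost_usd"] for s in sessions),
    "avg_tokens_per_session": avg_tokens(sessions),
    "avg_tokens_per_successful_session": avg_tokens(succeeded),
    "avg_tokens_per_failed_session": avg_tokens(failed),
}
print(overview)
```

Splitting token averages by outcome is useful because failed sessions that consume many tokens, as in this made-up data, are often the first place to look for wasted spend.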

Session drilldown

This view lists all of your previously recorded sessions with useful data about each one, such as total execution time and a chat history view. Charts give you a breakdown of the types of events that were called and how long they took.

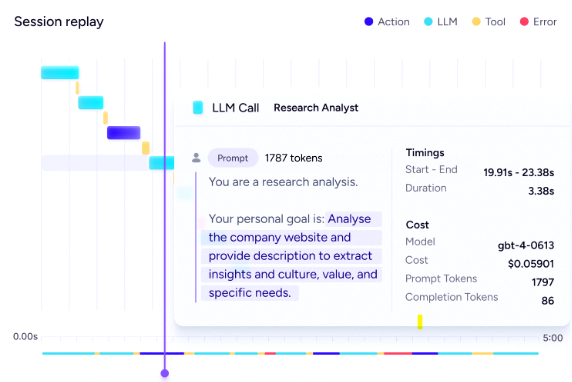

Session replay

Most powerful of all is the Session Replay. On the left is a waterfall timeline of all your LLM calls, action events, tool calls, and errors. On the right are specific details about the event you have selected on the waterfall, for instance the exact prompt and completion for a given LLM call. Most of this is recorded automatically for you.

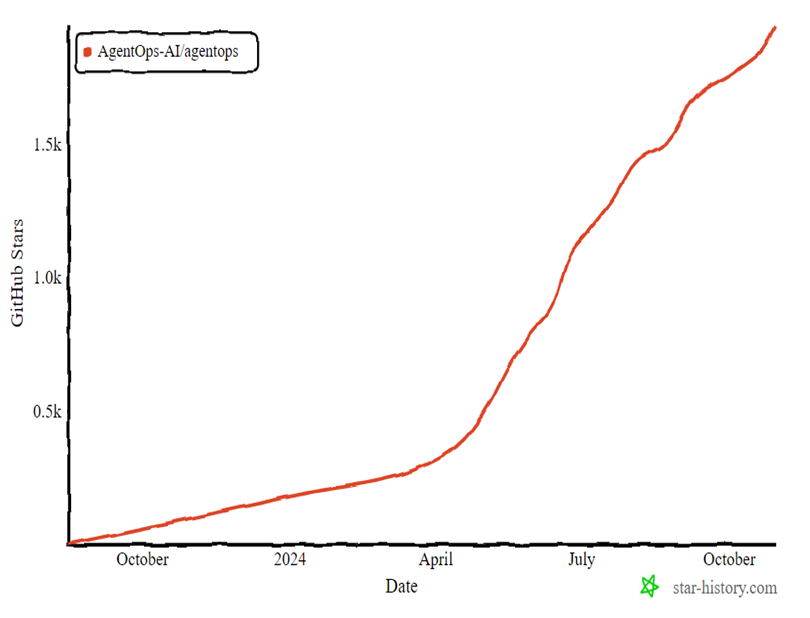

2. Popularity

AgentOps' popularity has been growing rapidly since April 2024. The AgentOps team is actively adding new features and improving usability, which is likely to contribute to its continued rise in popularity.

Conclusion

Determining which tool is better isn’t straightforward. Both Langfuse and AgentOps offer valuable features for observing LLM applications. Langfuse is one of the most widely used tools and is compatible with nearly all major GenAI frameworks. On the other hand, AgentOps may be a better choice if you’re working with AutoGen.

Ultimately, the best choice depends on your AI infrastructure, workflow requirements, and long-term scalability goals. Investing in the right observability tool ensures that your AI systems remain efficient, cost-effective, and trustworthy. Looking to enhance your AI observability strategy? Reach out to us at marketing@confiz.com for expert insights on choosing the right solution for your business.