Data & AI Evangelist


Did you know that a whopping 77% of business executives believe generative AI will have a bigger impact than any other technology over the next 3-5 years? Gen AI tools like ChatGPT, Google Gemini, and Microsoft Copilot are changing the game, from speeding up code-writing and generating content to simplifying daily tasks. But with great power comes great responsibility, raising one big, burning question: What’s ethical and what’s not when it comes to using generative AI?

Adding to the urgency, a recent study reveals that 56% of business executives are either unaware or unsure if their organizations even have ethical guidelines for using generative AI. This shocking statistic exposes a major gap in understanding and preparation, signaling a clear call to action for businesses to take generative AI ethics seriously in the age of data and AI.

In this blog, we’ll break down what AI ethics really means, cover the five pillars of the ethics of generative AI, and share how your business can set up for success in this new era. Keep reading to get the full picture.

What is AI ethics? The moral compass for generative AI development

Ethics in AI refers to the guidelines and principles that govern the development and use of artificial intelligence in a way that is fair, transparent, accountable, and beneficial to society. As AI technology continues to evolve rapidly, the ethics of AI ensure that AI systems operate responsibly, avoiding harm and respecting fundamental human rights. These principles cover everything from the way data is collected and used to the potential societal impact of deploying AI technologies. Key areas of concern include privacy, fairness, accountability, and bias prevention.

AI governance plays a critical role in establishing frameworks and policies that uphold ethics in AI. Responsible AI governance ensures that AI systems are designed to be transparent so users understand how decisions are made and explainable so outcomes can be scrutinized and trusted. Additionally, it calls for a commitment to inclusivity, ensuring that AI technologies do not disproportionately disadvantage any particular group. In a nutshell, AI ethics and governance aim to balance technological advancement with societal well-being, creating solutions that enhance human life without causing unintended consequences.

Further reading: Gain trust and transparency with data governance in the age of generative AI.

Why is it important to consider ethics when using generative AI?

Ethics in AI has become a critical concern for organizations, as generative AI and similar technologies have far-reaching impacts on individuals, businesses, and society. Data protection laws like GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act), together with international frameworks such as the UNESCO recommendations on the ethics of AI, the OECD AI Principles, and WHO guidance on AI ethics, play a crucial role in shaping the ethical landscape of generative AI. These laws and guidelines emphasize the protection of personal data, transparency, and accountability, so that AI systems respect privacy rights and are harder to misuse.

Ethical considerations of AI help ensure responsible use, foster trust, and minimize unintended harm. Here are the key reasons why ethics matter in generative AI:

- Preventing harm: Generative AI can produce misinformation, biased content, or harmful outputs. Ethical guidelines mitigate these risks and protect users from negative consequences.

- Ensuring fairness: AI systems can unintentionally perpetuate or amplify biases present in training data. Ethical practices promote fairness and inclusivity by addressing these biases.

- Building trust: Transparent and ethical use of AI fosters trust among users, stakeholders, and the public, ensuring the technology is accepted and adopted responsibly.

- Protecting privacy: Generative AI often processes vast amounts of data, raising privacy concerns. Ethical considerations ensure that data is handled securely and that it respects user consent.

- Promoting accountability: Clear ethical standards help define accountability, ensuring developers and organizations take responsibility for AI’s outcomes and impacts.

- Avoiding misuse: Generative AI can be misused for malicious purposes, such as creating deepfakes or spam. Ethical use helps prevent such exploitation.

- Supporting long-term benefits: Ethical AI practices prioritize sustainable development and align with societal values, ensuring that advancements benefit humanity as a whole.

5 foundational pillars for building a responsible generative AI model

Recognizing the need for standards in the ethical use of generative AI is the first step toward responsible implementation. It’s essential to ensure this powerful technology drives positive change for businesses and society while minimizing unintended harm. The ethical considerations when using generative AI demand a proactive approach to identifying and addressing potential challenges before they evolve into real-world issues.

The second step is creating robust policies to guide ethical AI use. This involves understanding the foundational models behind generative AI and building frameworks that align with ethical principles. But what are the pillars of AI ethics that serve as the foundation for responsible practices?

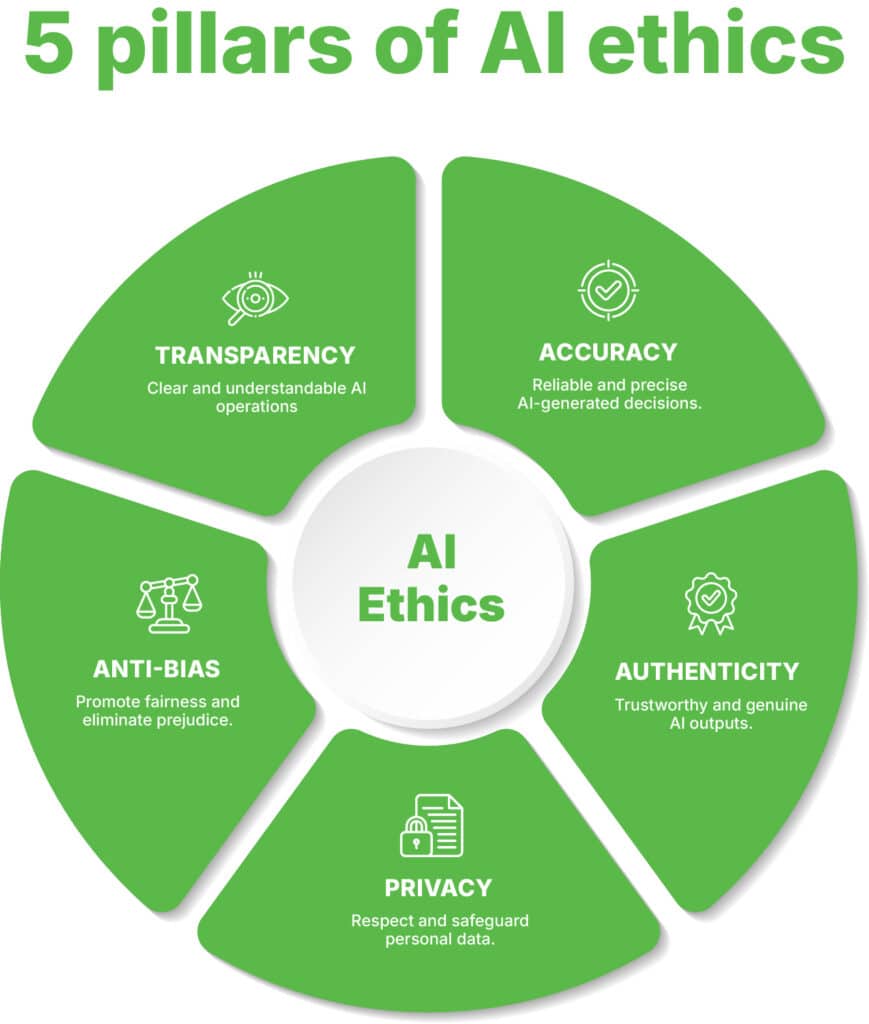

At the heart of ethical AI are five key pillars: accuracy, authenticity, privacy, anti-bias, and transparency.

Let’s explore how each of these principles forms the foundation for generative AI ethics, highlighting the responsibility of developers using generative AI.

Accuracy

Accuracy is paramount when it comes to building generative AI models. With the existing generative AI concerns around misinformation, engineers should prioritize accuracy and truthfulness when designing gen AI solutions. Developers must strive to create models that produce outputs that are not only relevant but also factual and contextually appropriate. This involves:

- Rigorous testing: measure how well the model performs against a set of known benchmarks.

- Data quality: train your model on high-quality, well-annotated data to minimize errors.
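As a rough illustration of testing against known benchmarks, the sketch below scores a model by exact match against reference answers. The `toy_model` function and the two-item `BENCHMARK` are hypothetical stand-ins for a real model call and a real evaluation set, and exact match is only one of many possible metrics.

```python
from typing import Callable


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(text.lower().split())


def benchmark_accuracy(
    generate: Callable[[str], str],
    benchmark: list[tuple[str, str]],
) -> float:
    """Return the fraction of prompts whose output matches the reference answer."""
    if not benchmark:
        raise ValueError("benchmark must contain at least one example")
    correct = sum(
        normalize(generate(prompt)) == normalize(expected)
        for prompt, expected in benchmark
    )
    return correct / len(benchmark)


# Hypothetical stand-in for a real model call (assumption, not a real API).
def toy_model(prompt: str) -> str:
    answers = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(prompt, "")


# Hypothetical benchmark of (prompt, reference answer) pairs.
BENCHMARK = [("Capital of France?", "paris"), ("2 + 2?", "4")]

score = benchmark_accuracy(toy_model, BENCHMARK)
print(f"Exact-match accuracy: {score:.0%}")
```

Tracking a score like this across model versions makes regressions in factual accuracy visible before release.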

Authenticity

We live in an era where generative AI has blurred the lines between real and synthetic, creating a world where text, images, and videos can be convincingly faked. This new reality makes it more critical than ever to build generative AI models that can be trusted to deliver genuine, meaningful content. The goals of a generative AI model should be aligned with responsible content generation. Avoid enabling uses that can deceive or manipulate people, such as creating deepfakes or spreading misinformation.

Engineers have a responsibility to ensure that what their models create upholds the integrity and authenticity we rely on. Solutions such as deepfake detection algorithms, Retrieval Augmented Generation (RAG), and digital watermarking can help.

Privacy

Generative AI models have heightened concerns around data consent and copyrights, but one area where developers can make a real impact is by prioritizing user data privacy. Models trained on personal information come with significant risks: a single data breach or misuse can spark legal consequences and shatter user trust, a foundation that no successful AI system can afford to lose. Therefore, developers should consider:

- Data anonymization

Make user anonymity your default. Before training your models, ensure personal data is stripped of identifiable information. This way, you’re protecting user privacy while still leveraging valuable insights with data anonymization techniques.

- Data minimization

Follow principles like GDPR’s data minimization, which calls for processing only what’s absolutely necessary. By collecting minimal data, you not only enhance privacy but also simplify compliance with data regulations.
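To make these two ideas concrete, here is a minimal sketch that first keeps only an allow-list of fields (minimization) and then masks email addresses and phone numbers in the remaining text (a simple form of anonymization). The field names, the `ALLOWED_FIELDS` set, and the regular expressions are illustrative assumptions; pattern-based redaction misses many identifiers (names, addresses, IDs), so treat this as a starting point rather than a complete anonymization pipeline.

```python
import re

# Simplified patterns for illustration only; real PII detection needs far more coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical allow-list: only the fields the training task actually needs.
ALLOWED_FIELDS = {"ticket_text", "product"}


def redact(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_record(record: dict) -> dict:
    """Drop fields that are not on the allow-list, then redact the remaining text."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    return {k: redact(v) if isinstance(v, str) else v for k, v in minimized.items()}


# Hypothetical raw support ticket.
raw = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "product": "router",
    "ticket_text": "Call me at +1 555-123-4567 or mail jane@example.com.",
}

clean = prepare_record(raw)
print(clean)
```

Dropping fields before redacting means data that is never needed is never processed at all, which is the point of minimization.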

Anti-bias

Generative models are only as fair as the data they learn from. If fed biased information, they will inadvertently perpetuate or even amplify societal biases, which can lead to public backlash, legal repercussions, and damage to a brand’s reputation. Unchecked bias can compromise fairness, trust, and even human rights. That’s why building bias-free AI requires periodic audits to ensure your generative AI model evolves responsibly.

To build responsible models, developers must use bias detection and mitigation techniques, such as adversarial training and diverse training data, both before and during training to actively identify and reduce inequalities in generative AI models.
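One simple check a periodic audit might include is comparing how often each group receives a favorable outcome. The sketch below computes per-group rates and the gap between the highest and lowest (often called the demographic parity difference). The group labels and `audit_sample` data are made up for illustration, and this is only one of several possible fairness metrics; it does not show mitigation techniques such as adversarial training.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the favorable-outcome rate per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True when the model produced a favorable result for that example.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Difference between the highest and lowest group rates (0 means equal rates)."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, favorable outcome?).
audit_sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = positive_rates(audit_sample)
gap = parity_gap(audit_sample)
print(rates, gap)
```

A team could set a threshold for the gap and flag the model for review whenever an audit exceeds it.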

Transparency

When it comes to building generative AI models, achieving transparency is the foundation of trust. Without it, users are left in the dark, unable to fact-check or evaluate AI-produced content effectively. To build trust and accountability, AI systems must be open and clear about how they operate.

Developers can take a few measures to boost transparency in generative AI solutions, such as:

- Design models that can explain their decision-making processes in a way that users can easily understand. Use interpretable algorithms and provide clear documentation outlining how your model works, including its limitations and areas of uncertainty.

- Be upfront about when and how generative AI is used, especially in contexts where it could mislead, such as automated content generation or AI-driven recommendations.
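Being upfront about AI use can be built into the output itself. The sketch below wraps generated text with disclosure metadata and a visible notice. The `DisclosedOutput` class, its fields, and the model name `example-model` are hypothetical; the exact wording and placement of a disclosure depend on your product and applicable rules.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DisclosedOutput:
    """Generated text bundled with the metadata needed to disclose its origin."""
    text: str
    model_name: str
    generated_at: str
    ai_generated: bool = True
    known_limitations: tuple[str, ...] = ()

    def render(self) -> str:
        """Return the text followed by a visible AI-generation notice."""
        notice = f"[AI-generated by {self.model_name}]"
        return f"{self.text}\n\n{notice}"


def disclose(text: str, model_name: str, limitations=()) -> DisclosedOutput:
    """Attach a UTC timestamp and documented limitations to a model output."""
    return DisclosedOutput(
        text=text,
        model_name=model_name,
        generated_at=datetime.now(timezone.utc).isoformat(),
        known_limitations=tuple(limitations),
    )


# Hypothetical usage with a made-up model name.
out = disclose("Your order ships in 3 days.", "example-model", ["May misstate dates"])
print(out.render())
```

Keeping the limitations alongside the text lets downstream interfaces show users both that content is AI-generated and where it may be unreliable.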

More insights: How to start your generative AI journey: A roadmap to success.

Responsible use of generative AI: How to set up your business for ethical generative AI use

Although generative AI brings incredible opportunities for businesses, using it responsibly takes more than just ticking boxes. It’s about understanding the ethics of AI in business and making thoughtful choices that build trust with your customers, employees, and stakeholders, all while keeping potential risks in check. Let’s explore some key strategies to help your business use generative AI in a way that’s both ethical and impactful:

Get clear on your purpose

Before diving into generative AI, start by pinpointing exactly how your business plans to use it. Will it help generate content, improve product development, or streamline customer service? Defining your use cases upfront not only sharpens your strategy but also ensures you can align your AI initiatives with ethical principles from the get-go.

Set the bar high with quality standards

Don’t leave the quality of your generative AI outputs to chance. Set clear, high standards from the start. Think about what matters most: accuracy, inclusivity, fairness, or even how well the AI matches your brand’s tone and style. Regularly review and fine-tune your AI’s performance, and be ready to step in and retrain it as needed. After all, ethical AI use means keeping a close eye on what your technology is producing and making continuous improvements.

Establish company-wide AI guidelines

Make sure everyone in your organization is on the same page when it comes to the responsible use of generative AI. Develop clear, comprehensive AI policies that apply across all teams and departments. Cover everything from ethical principles and data privacy to transparency, compliance, and strategies for minimizing bias. By creating a unified playbook, you’ll promote professional integrity and help ensure that your generative AI practices are ethical and consistent throughout the company.

Cultivate a culture of responsibility

Make ethics a team sport! Encourage open discussions about the risks and rewards of generative AI and involve your team in shaping ethical practices. Making ethics part of your culture empowers everyone to use AI thoughtfully and responsibly. When people feel free to contribute, your business is better equipped to make smarter, more ethical decisions about generative AI.

Keep your policies up to date

AI technology and regulations are constantly evolving, so don’t let your policies become outdated. Make it a habit to regularly review and refresh your generative AI guidelines, ensuring they stay in line with the latest ethical standards, legal requirements, and technological advancements. Staying proactive with updates helps your organization stay compliant and ethically sound as AI continues to transform the business landscape.

Empower your business with Confiz’s gen AI expertise

Generative AI is revolutionizing industries and setting new benchmarks for innovation, making the call for ethical and thoughtful implementation louder than ever. As this game-changing technology becomes mainstream, enterprises face a critical responsibility: using AI in ways that are both safe and responsible.

At Confiz, we understand the complexities of generative AI and the ethical challenges that come with it. With proven expertise in generative AI proofs of concept (POCs), we help businesses identify the right generative AI applications that drive growth and uphold ethical standards. Our approach is designed to keep your AI solutions accurate, fair, and trustworthy, setting your business up for long-term success. Let’s talk about how Confiz can elevate your business with ethical generative AI solutions. Reach out to us at marketing@confiz.com today.