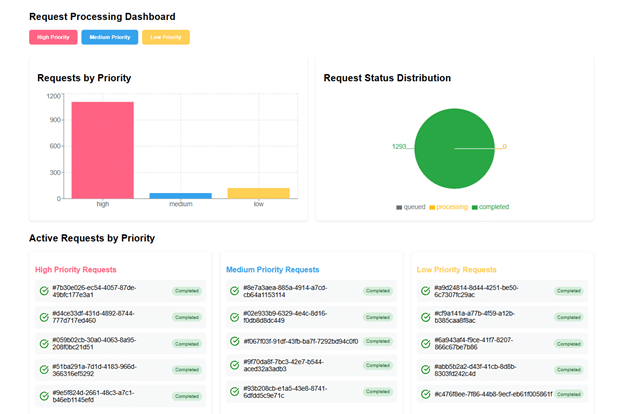

Imagine you’re running a mission-critical application on Microsoft Dataverse when your users suddenly start encountering errors like “Service Protection API Limit Exceeded” or “429 Too Many Requests.” Panic strikes: what went wrong? What does an “API rate limit exceeded” error mean, and how can it be fixed? More importantly, how can it be avoided in the future?

Welcome to the realm of Service Protection API Limits, a safeguard that keeps the Microsoft Dataverse platform available and responsive for all users. In this post, we’ll cover what you need to know about service protection API limits in Microsoft Dynamics 365: what they are, how they affect your applications, and how to handle them effectively.

What is rate limiting?

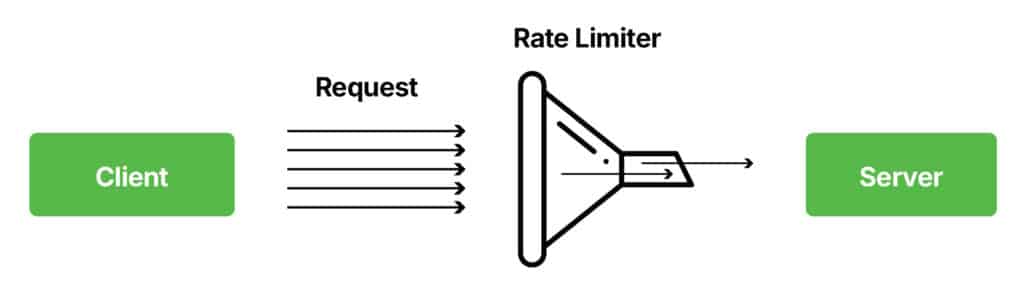

API rate limiting is a way of controlling how many requests a client can send to a server within a given period. It matters because it keeps the server from being overwhelmed and keeps everything running smoothly. If too many requests are made, the server can reject the request, return an error message, or delay its response.

Here is an illustration of how it works:

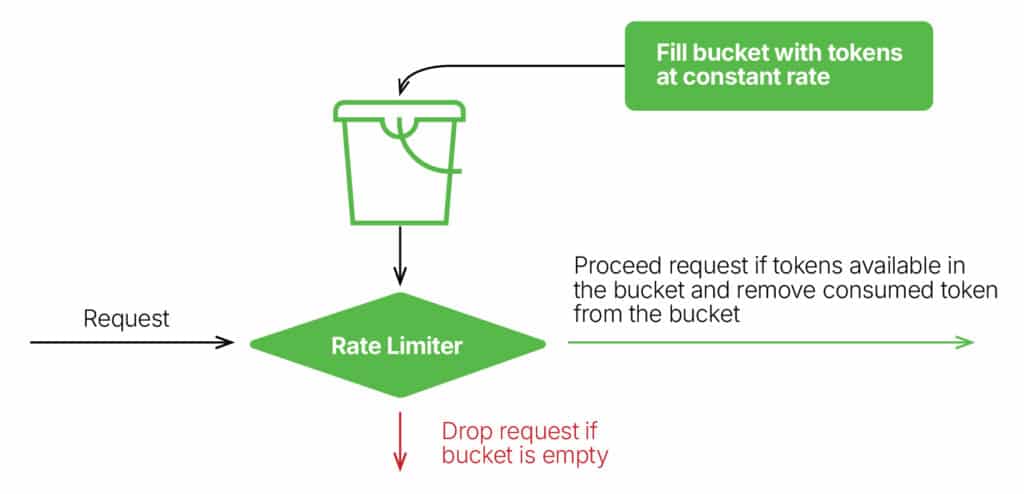
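The simplest way to “say no” to excess requests is a fixed-window counter. The minimal Python sketch below admits up to `max_requests` calls per window and rejects the rest until a new window begins; the class and parameter names are illustrative only and are not part of any Dataverse API. The current time is passed in explicitly so the behaviour is easy to follow.

```python
class FixedWindowLimiter:
    """Illustrative fixed-window rate limiter (hypothetical, not a Dataverse API)."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.window_start = None  # start time of the current window
        self.count = 0            # requests admitted in the current window

    def allow(self, now: float) -> bool:
        """Return True to admit the request at time `now`, False to reject it."""
        # Open a new window on the first call or once the current one has elapsed.
        if self.window_start is None or now - self.window_start >= self.window_seconds:
            self.window_start = now
            self.count = 0
        if self.count < self.max_requests:
            self.count += 1
            return True
        return False


limiter = FixedWindowLimiter(max_requests=3, window_seconds=60.0)
results = [limiter.allow(now=t) for t in (0, 1, 2, 3, 61)]
print(results)  # [True, True, True, False, True]
```

A fixed window is easy to reason about, but a burst at the end of one window can be followed immediately by another burst at the start of the next, which is one reason smoother schemes such as the token bucket are popular.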

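Token Bucket Algorithm: A key rate-limiting technique

One of the most common rate-limiting techniques is the Token Bucket Algorithm, which Amazon Web Services uses to throttle API requests (for example, in Amazon API Gateway). Tokens are added to a bucket at a steady rate up to a fixed capacity; each request consumes a token, and a request that arrives when the bucket is empty is rejected or delayed. This permits short bursts up to the bucket’s capacity while capping the long-run average rate.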
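Here is a minimal Python sketch of a token bucket, written to illustrate the algorithm described above rather than to reproduce any AWS implementation. `capacity`, `refill_rate`, and `start` are hypothetical parameter names, and the caller supplies the current time so the example is deterministic.

```python
class TokenBucket:
    """Minimal token bucket: refills at a steady rate, each request spends tokens."""

    def __init__(self, capacity: float, refill_rate: float, start: float = 0.0):
        self.capacity = capacity        # maximum tokens the bucket can hold
        self.refill_rate = refill_rate  # tokens added per second
        self.tokens = capacity          # start full, so an initial burst is allowed
        self.last_refill = start        # time of the most recent refill

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill for the elapsed time, then spend `cost` tokens if available."""
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # bucket empty: reject (or delay) the request


bucket = TokenBucket(capacity=2, refill_rate=1.0)
decisions = [bucket.allow(now=t) for t in (0.0, 0.0, 0.0, 1.0, 1.5)]
print(decisions)  # [True, True, False, True, False]
```

The two tokens in the full bucket let the first two requests through instantly, the third is rejected, and one second of refill admits exactly one more request.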

Understanding Microsoft Dataverse API limits

Microsoft Dataverse evaluates API limits based on three key factors:

1: Number of requests: Total requests a user sends within a 5-minute window.

2: Execution time: Combined time required to process all requests within a 5-minute window.

3: Concurrent requests: Number of simultaneous requests sent by a user.

These limits are enforced per user and per web server, which keeps usage fair across the platform.
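To see how the three factors interact, the following Python sketch models them on the client side with a 5-minute sliding window, keyed by a (user, web server) pair. It is a rough approximation for planning, not how Dataverse implements the check, and the server’s response is always authoritative. The thresholds (6,000 requests, 1,200 seconds of combined execution time, 52 concurrent requests) are the values Microsoft documented at the time of writing; treat them as assumptions and confirm them in the current documentation. The class name and the user and server names in the usage lines are hypothetical.

```python
from collections import defaultdict, deque

# Thresholds Microsoft documented at the time of writing (assumptions; check current docs).
WINDOW_SECONDS = 300          # 5-minute sliding window
MAX_REQUESTS = 6000           # requests per window
MAX_EXECUTION_SECONDS = 1200  # combined execution time per window (20 minutes)
MAX_CONCURRENT = 52           # simultaneous requests


class ServiceProtectionTracker:
    """Client-side approximation of the three limits, tracked per (user, server) key."""

    def __init__(self):
        self.sent = defaultdict(deque)      # key -> send timestamps
        self.executed = defaultdict(deque)  # key -> (finish_time, execution_seconds)
        self.in_flight = defaultdict(int)   # key -> requests currently running

    def _prune(self, key, now):
        # Discard entries that have slid out of the 5-minute window.
        sent, executed = self.sent[key], self.executed[key]
        while sent and now - sent[0] >= WINDOW_SECONDS:
            sent.popleft()
        while executed and now - executed[0][0] >= WINDOW_SECONDS:
            executed.popleft()

    def would_exceed(self, key, now):
        """Return the names of any limits a new request would run into at time `now`."""
        self._prune(key, now)
        exceeded = []
        if len(self.sent[key]) >= MAX_REQUESTS:
            exceeded.append("requests")
        if sum(seconds for _, seconds in self.executed[key]) >= MAX_EXECUTION_SECONDS:
            exceeded.append("execution_time")
        if self.in_flight[key] >= MAX_CONCURRENT:
            exceeded.append("concurrency")
        return exceeded

    def start(self, key, now):
        self.sent[key].append(now)
        self.in_flight[key] += 1

    def finish(self, key, now, execution_seconds):
        self.in_flight[key] -= 1
        self.executed[key].append((now, execution_seconds))


tracker = ServiceProtectionTracker()
key = ("integration-user", "web-server-1")  # hypothetical user and web server
for i in range(60):
    tracker.start(key, now=i)
    tracker.finish(key, now=i + 1, execution_seconds=21)  # 60 x 21 s = 1,260 s

during = tracker.would_exceed(key, now=100)
later = tracker.would_exceed(key, now=400)
print(during)  # ['execution_time']
print(later)   # []
```

Sixty requests that each take 21 seconds add up to 1,260 seconds of execution time, so the execution-time budget is spent even though the request count is far below 6,000; once those entries slide out of the window, no limit is reported.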

Common error messages related to API limits in Dynamics 365

Think of Service Protection API Limits as the Microsoft Dataverse platform’s traffic lights. They safeguard the platform’s availability and performance for all users by preventing any single user or application from overloading the system with requests. When an application exceeds these limits, Microsoft Dataverse responds with specific errors:

- A 429 Too Many Requests error from the Web API.

- A “Service Protection API Limit Exceeded” error message.

- An OrganizationServiceFault with distinct error codes in the Microsoft Dataverse SDK for .NET.
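A client can recognize these errors by the HTTP status code or by the fault’s error code. The Python sketch below assumes the three service protection error codes listed in Microsoft’s Dataverse documentation at the time of writing (verify them against the current docs); the function and dictionary names are hypothetical. Because the codes are often written in unsigned hexadecimal form (for example 0x80072322) but appear as signed 32-bit integers in the SDK, the helper accepts both forms.

```python
from typing import Optional

# Service protection fault codes as listed in Microsoft's Dataverse documentation
# at the time of writing (an assumption: verify against the current docs).
SERVICE_PROTECTION_CODES = {
    -2147015902: "Number of requests exceeded",             # 0x80072322
    -2147015903: "Combined execution time exceeded",        # 0x80072321
    -2147015898: "Number of concurrent requests exceeded",  # 0x80072326
}


def to_signed_32(code: int) -> int:
    """Map an unsigned hex code such as 0x80072322 to its signed 32-bit value."""
    return code - (1 << 32) if code >= (1 << 31) else code


def classify_throttle(status_code: Optional[int] = None,
                      error_code: Optional[int] = None) -> Optional[str]:
    """Describe the service protection limit hit, or return None if it isn't one."""
    if error_code is not None:
        reason = SERVICE_PROTECTION_CODES.get(to_signed_32(error_code))
        if reason is not None:
            return reason
    if status_code == 429:
        return "Too Many Requests"
    return None


print(classify_throttle(error_code=0x80072322))   # Number of requests exceeded
print(classify_throttle(error_code=-2147015898))  # Number of concurrent requests exceeded
print(classify_throttle(status_code=429))         # Too Many Requests
print(classify_throttle(status_code=500))         # None
```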

Why should you be careful?

Knowing these limits is essential whether you’re building portal solutions, data integration tools, or interactive applications. Ignoring them may result in:

- Frustrated users encountering errors.

- Delayed operations and disrupted workflows.

- Lower throughput for data-intensive applications.

Don’t worry, though; we’ve got you covered. Let’s examine the specifics of staying within these limits.

Impact of API limits on different types of applications

1: Interactive client applications

Interactive apps are the face of a business: end users rely on them for day-to-day tasks. During normal use, these apps are unlikely to hit API limits, but bulk operations (like updating hundreds of records at once) can trigger errors.

What you can do:

- You can design your UI to discourage users from sending overly demanding requests.

- You can handle errors gracefully; don’t show technical error messages to end users.

- You can implement retry mechanisms to manage temporary limits.

Pro tip: Use progress indicators and friendly messages like “Processing your request, please wait” to keep users informed.
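Handling errors gracefully can be as simple as translating a throttling failure into plain language at the boundary between your data layer and the UI. In this Python sketch, `ThrottledError` is a hypothetical exception that your own data-access code would raise on a 429 or service protection fault, carrying the Retry-After value in seconds; `run_for_user` and `bulk_update` are likewise illustrative names.

```python
class ThrottledError(Exception):
    """Hypothetical exception raised by your data layer on a service protection fault."""

    def __init__(self, retry_after_seconds: float):
        super().__init__(f"Service protection limit hit; retry after {retry_after_seconds} s")
        self.retry_after_seconds = retry_after_seconds


def run_for_user(operation):
    """Run `operation`; if it is throttled, return a friendly message instead."""
    try:
        return operation()
    except ThrottledError as err:
        # Keep the technical details out of the UI and give a rough wait time.
        wait = max(1, round(err.retry_after_seconds))
        return f"The server is busy. Please try again in about {wait} seconds."


def bulk_update():
    # Stand-in for a bulk operation that hits the limit.
    raise ThrottledError(retry_after_seconds=12.4)


print(run_for_user(bulk_update))
print(run_for_user(lambda: "Saved 40 records."))
```

The first call prints “The server is busy. Please try again in about 12 seconds.” while the second simply returns the operation’s own result.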

2: Data integration applications

Data integration apps are the workhorses of the system, handling bulk data loads and updates. Because of their high request volumes, they are more likely to hit API limits.

What you can do:

- You can use batch operations to reduce the number of individual requests.

- You can use parallel processing to maximize throughput.

- You can monitor and adjust request rates based on the Retry-After duration.
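Batching starts with grouping records. The Python sketch below splits any iterable into lists of at most `size` items; each list would then be sent as one batch request (the sending code is omitted). The `chunked` helper is a hypothetical name, and the default of 1,000 is an assumption based on the maximum Microsoft documented for Web API `$batch` requests and `ExecuteMultiple` at the time of writing.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

MAX_BATCH_SIZE = 1000  # assumed cap; check the current Dataverse documentation


def chunked(items: Iterable[T], size: int = MAX_BATCH_SIZE) -> Iterator[List[T]]:
    """Yield successive lists of at most `size` items from `items`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(items)
    # islice pulls the next `size` items; an empty list means the input is exhausted.
    while batch := list(islice(iterator, size)):
        yield batch


records = [{"id": i} for i in range(2500)]
sizes = [len(batch) for batch in chunked(records)]
print(sizes)  # [1000, 1000, 500]
```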

Pro tip: Start with a lower request rate and gradually increase it until you hit the limits. Let the server guide you to the optimal rate.
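One way to let the server guide you is an additive-increase/multiplicative-decrease (AIMD) controller, a general congestion-control technique swapped in here for illustration rather than something Dataverse prescribes. The sketch raises the request rate by a fixed step after each success and halves it after each throttling response; the class name and defaults are hypothetical, and you would still wait out the Retry-After duration before resuming.

```python
class AdaptiveRate:
    """AIMD controller for a client's request rate (requests per second)."""

    def __init__(self, start=1.0, step=1.0, minimum=1.0, maximum=100.0):
        self.rate = float(start)
        self.step = step
        self.minimum = minimum
        self.maximum = maximum

    def on_success(self):
        # Additive increase: probe for more throughput while requests succeed.
        self.rate = min(self.maximum, self.rate + self.step)

    def on_throttled(self):
        # Multiplicative decrease: back off sharply when the server pushes back.
        self.rate = max(self.minimum, self.rate / 2)

    def delay_between_requests(self):
        """Seconds to wait between requests at the current rate."""
        return 1.0 / self.rate


controller = AdaptiveRate(start=2)
history = []
for outcome in ["ok", "ok", "ok", "throttled", "ok"]:
    if outcome == "ok":
        controller.on_success()
    else:
        controller.on_throttled()
    history.append(controller.rate)

print(history)  # [3.0, 4.0, 5.0, 2.5, 3.5]
```

The rate climbs from 2 to 5 requests per second, drops to 2.5 after the throttled response, and then resumes climbing, settling into a sawtooth just below the point where the server starts pushing back.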

3: Portal applications

Portal apps frequently handle requests from anonymous users through a single service principal account. Because limits are applied per user, all of that traffic counts against one account, so high traffic can quickly trigger errors.

What you can do:

- You can display a user-friendly message like “Server is busy – please try again later.”

- You can use the Retry-After duration to tell users when the system will be available again.

- You can disable further requests until the current operation is complete.

Pro tip: Implement a queue system to absorb traffic spikes and avoid overwhelming the server.
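A queue in front of Dataverse lets a portal absorb bursts and shed load gracefully. In this Python sketch (the class and method names are hypothetical), `submit` accepts requests until a bounded `queue.Queue` is full and returns the busy message otherwise, while `drain` processes a limited number of queued requests per call, so a scheduler invoking it periodically controls the pace of calls to the server.

```python
import queue


class PortalRequestQueue:
    """Hypothetical bounded buffer between portal visitors and Dataverse."""

    def __init__(self, capacity: int):
        self.pending = queue.Queue(maxsize=capacity)

    def submit(self, request) -> str:
        """Queue a request, or shed it with a busy message if the queue is full."""
        try:
            self.pending.put_nowait(request)
            return "Your request has been received."
        except queue.Full:
            return "Server is busy – please try again later."

    def drain(self, handler, max_items: int) -> int:
        """Pass up to `max_items` queued requests to `handler`; return how many ran."""
        processed = 0
        while processed < max_items:
            try:
                request = self.pending.get_nowait()
            except queue.Empty:
                break
            handler(request)
            self.pending.task_done()
            processed += 1
        return processed


portal_queue = PortalRequestQueue(capacity=2)
replies = [portal_queue.submit(f"form-{i}") for i in range(3)]
handled = []
count = portal_queue.drain(handled.append, max_items=10)
print(replies)
print(count, handled)  # 2 ['form-0', 'form-1']
```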

Retry strategies: Your safety net

When you hit a service protection limit, the response includes a Retry-After duration. This is your cue to pause and retry the request after the specified time.
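Before you can pause, you need the wait time in a usable form. The HTTP specification allows a Retry-After header to contain either a number of seconds or an HTTP date, so this Python sketch handles both using only the standard library. The function name `retry_after_seconds` is hypothetical.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: str, now: Optional[datetime] = None) -> float:
    """Convert a Retry-After value (delta-seconds or HTTP date) to seconds to wait."""
    value = value.strip()
    if value.isdigit():
        return float(value)
    # Otherwise treat it as an absolute HTTP date, e.g. "Wed, 21 Oct 2015 07:28:00 GMT".
    retry_at = parsedate_to_datetime(value)
    now = now or datetime.now(timezone.utc)
    # A date already in the past means the client may retry immediately.
    return max(0.0, (retry_at - now).total_seconds())


reference = datetime(2015, 10, 21, 7, 27, 30, tzinfo=timezone.utc)
print(retry_after_seconds("7"))                                               # 7.0
print(retry_after_seconds("Wed, 21 Oct 2015 07:28:00 GMT", now=reference))  # 30.0
```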

For interactive apps

- Display a “Server is busy” message.

- Give users an option to cancel the operation.

- Prevent users from submitting additional requests until the current one is complete.
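In the UI you would typically disable the submit button, but it helps to enforce the same rule in code. This Python sketch (a hypothetical `SubmitGuard` class) runs one operation at a time and refuses, rather than queues, any submission that arrives while another is still in progress, using a non-blocking `threading.Lock.acquire`.

```python
import threading


class SubmitGuard:
    """Runs one operation at a time; submissions during a run are refused, not queued."""

    def __init__(self):
        self._lock = threading.Lock()

    def try_run(self, operation):
        """Run `operation` and return its result, or None if another run is in progress."""
        # A non-blocking acquire returns False at once if the lock is already held.
        if not self._lock.acquire(blocking=False):
            return None
        try:
            return operation()
        finally:
            self._lock.release()


guard = SubmitGuard()


def save_with_double_click():
    # Simulates the user clicking "Save" again while this save is still running.
    second_click = guard.try_run(lambda: "second save")
    return "first save", second_click


print(guard.try_run(save_with_double_click))  # ('first save', None)
print(guard.try_run(lambda: "later save"))    # later save
```

The simulated second click returns `None` because the first save still holds the lock; once that save finishes, new submissions run normally.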

For non-interactive apps

- Pause execution using methods like Task.Delay or equivalent.

- Retry the request after the Retry-After duration has passed.
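The two steps above amount to a small retry loop. In this Python sketch, `time.sleep` plays the role of `Task.Delay`, and `ThrottledError` is a hypothetical exception your client would raise on a 429 or service protection fault, carrying the Retry-After value in seconds. The `sleep` parameter is injectable so the example can run without actually waiting, and `max_attempts` caps how long the loop keeps trying.

```python
import time


class ThrottledError(Exception):
    """Hypothetical exception your client raises on a service protection fault."""

    def __init__(self, retry_after: float):
        super().__init__(f"throttled; retry after {retry_after} s")
        self.retry_after = retry_after


def call_with_retry(operation, max_attempts: int = 5, sleep=time.sleep):
    """Run `operation`, pausing for the Retry-After duration whenever it is throttled."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ThrottledError as err:
            if attempt == max_attempts:
                raise  # give up and surface the error to the caller
            sleep(err.retry_after)  # wait exactly as long as the server asked


# Usage: a fake operation that is throttled twice before succeeding.
responses = iter([ThrottledError(2.0), ThrottledError(3.0), "ok"])
waits = []


def flaky():
    item = next(responses)
    if isinstance(item, Exception):
        raise item
    return item


print(call_with_retry(flaky, sleep=waits.append))  # ok
print(waits)                                       # [2.0, 3.0]
```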

Pro tip: Use libraries like Polly (for .NET) to implement robust retry policies. Here’s an example: